Taylorism and the Myth of More Efficient Collaboration Using AI

An interesting back and forth on the cost of politeness has been playing out in the media. It started when Sam Altman, the CEO of OpenAI, pointed out that everyday words like 'please' and 'thank you' carry an incremental cost when people use AI:

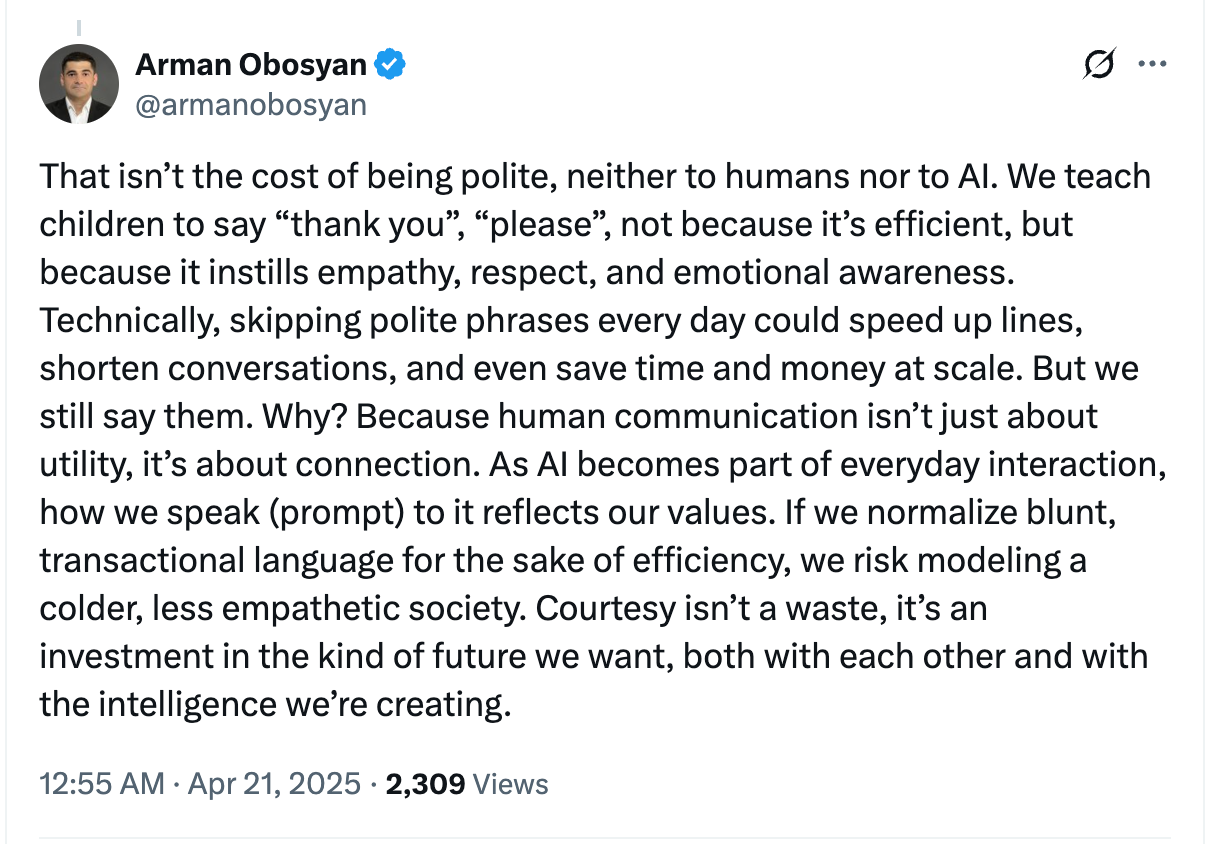

A lot of people weighed in on whether this meant we should forgo the niceties when talking to AI. I thought this comment was a pretty good summary of why we might not want to shape our human behavior based on the economics of machine learning:

Not included in this conversation is the fact that a lot of us who use AI for coding have placed pretty restrictive rules around what the AI can do without asking permission first, as the AI tends to run ahead and make a mess of things if we don't. When your Cursor ruleset explicitly states that the AI must make a detailed proposal for every change and then ask permission before making it, there is a lot of back and forth on details that invariably ends with words to the effect of 'please proceed'. Saying 'please proceed' in this context is not politeness; it is the ritual ending of a necessary collaborative process for creating alignment on the details of the next code change. The restrictive rules we put in place to prevent AI from running amok require negotiation on the details, at the end of which there needs to be some way of saying 'ok, we're good, go ahead'.

The real issue, not being explicitly called out here, is the need to air out the details and get everyone involved, human or AI, to agree on them. Whether you are a human team, an AI team, or some combination of the two, this is always absolutely necessary. Unless you are doing something well known that you have done many times before, it is quite impossible to predict in advance all of the details that will need to be agreed upon and simply dictate them in a statement of requirements.

The key technology that is normally used to ensure everyone is on the same page is called a 'meeting.' In modern management theory meetings are often seen as wasteful overhead activities which should be minimized or eliminated whenever possible. In the world of Agentic AI software development the extreme expression of this is the 'one-shot', which is a single prompt or communication to the AI telling it everything it needs to know, after which the AI is expected to produce a flawless application perfectly meeting the expectations of the prompter with no further questions asked.

The one-shot is the culmination of the philosophy of Taylorism, which dictates that every moment of the worker's day should be expended in acts of direct production, and that efficiency can be improved by eliminating every activity which is not an explicit act of production. Anyone who has written software knows that the one-shot idea is the ultimate fantasy of managers who cannot understand why developers cannot just get on with it. Rather than taking the time to create consensus and alignment based on shared understanding, the desire is to dictate minimally and have mind-reading developers simply 'know' what to do.

With people this never ends well, and with AI it can be even worse: AI has no ability to read your mind, as it does not possess a mind of its own. To actually communicate what you want the AI to do, you'll need to go back and forth in an iterative manner, defining and discussing and clarifying as you go, very much as people do with each other. In the terms of Taylorism it is literally impossible to make this efficient, as it is a prerequisite to production, not a direct act of production itself.

It is often necessary to remind people that Agile, the current best-practice process for getting everyone on the same page, was not originally about improving either velocity or efficiency. Agile emphasizes the idea that people who are working together to create a complicated thing, no matter what that thing is, probably need to spend quality time communicating with each other about what they are building, and why. Implementations of Agile, like Scrum, try to structure that communication as a repeatable process so the effort involved is more predictable and the methods and tools of communication are not random.

Adding Agentic AI development tools to the mix does not change any of this. If anything, adding AI makes the need for structured communication within a repeatable process even more important. AI tools arrive without context or intrinsic understanding of the current task and why it is important, so such communication is the only way to enable them to act with relevance and nuance.

It is true that AI tools may offer the potential for much greater velocity, but only through conversation with all the members of the team, human or otherwise, can we make sure that higher velocity is not pointed away from where the team needs to go. Interpreting the arrival of AI as an opportunity to try once again to eliminate the avenues of communication that enable teams to perform, based on outdated thinking about how value is created, is to attempt once more to fit complex and discovery-based development work into a completely inappropriate 19th-century factory-model mold.